Hey Siri! Explain NLP in AI.

- Aastha Thakker

- Oct 28, 2025

- 4 min read

Artificial Intelligence is a branch of computer science that lets us create or train computers and software to become intelligent machines that can behave and think like humans and make decisions.

To understand a term, it often helps to break it into smaller words and look at what each one means. If you split ‘artificial’ (man-made) and ‘intelligence’ (thinking power), the term simply means “man-made intelligence”.

Artificial Intelligence (AI) refers to the development of computer systems capable of performing tasks that normally require human intelligence, such as recognizing speech, making decisions, and identifying patterns. This is achieved through techniques such as Machine Learning, Natural Language Processing, Computer Vision, and Robotics.

Some very common examples of AI are:

ChatGPT: Uses large language models (LLMs) to generate text in response to questions.

Google Translate: Uses deep learning algorithms to translate text from one language to another.

Netflix: Uses machine learning algorithms to create personalized recommendations.

Siri or Google Assistant: Uses NLP and ML to understand spoken requests and respond to them.

Text editors or Autocorrect: Uses deep learning, ML, and NLP to learn from what you type and suggest corrections.

Nowadays, AI is literally everywhere; look around and you will surely find another 10 examples.

The different areas or subfields of AI are:

1) Supervised Learning: In supervised learning, the algorithm is trained on a labelled dataset, where each input is paired with its correct output label. E.g., Email Spam Filtering: a supervised learning algorithm can be trained on a labelled dataset of emails (spam and non-spam). It then classifies new emails as spam or not based on the patterns it identified during training.

2) Unsupervised Learning: Unsupervised learning trains algorithms on unlabeled data, and the system tries to find patterns or relationships within the data without explicit guidance. E.g., Network Anomaly Detection: unsupervised learning algorithms can analyze network traffic patterns without predefined labels. Any deviation from normal behavior can be flagged as a potential anomaly, which may indicate a security threat.

3) Reinforcement Learning: Reinforcement learning trains an algorithm to make sequences of decisions. The system learns by receiving feedback in the form of rewards or penalties. E.g., Intrusion Detection and Response: reinforcement learning can be used to train systems that adapt their responses to cyber threats. The system improves over time based on feedback (rewards or penalties) from successful or unsuccessful defenses against intrusions.

4) Natural Language Processing (NLP): NLP focuses on the interaction between computers and human language. It involves tasks like text and speech recognition, language translation, sentiment analysis, and more.

i. Phishing Detection: NLP algorithms can analyze email content to identify patterns associated with phishing attempts. They examine the language used in emails, looking for indicators of fraudulent activity or attempts to deceive users. NLP helps in flagging and blocking phishing emails before they reach users’ inboxes.

ii. Chatbot Security Assistance: NLP-driven chatbots can provide real-time assistance for IT security queries.

iii. Security Information and Event Management (SIEM): NLP is used to process and analyze logs, alerts, and incident reports in cybersecurity.

5) Robotics: Robotics in AI applies artificial intelligence to the design, construction, and operation of robots. It covers tasks such as perception (sensing the environment), decision-making, and movement. E.g., Autonomous Security Robots: they use perception to sense their environment and make decisions based on predefined security protocols.

In this blog, our focus will be on NLP.
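The spam-filtering example from supervised learning can be sketched as a tiny text classifier trained on labelled emails. Below is a minimal multinomial Naive Bayes model in plain Python, assuming a hypothetical six-email training set with the labels "spam" and "ham" (non-spam); the names `TRAINING_DATA`, `train`, and `classify` are illustrative only, and a real filter would need far more data and better tokenization.

```python
import math
from collections import Counter

# Hypothetical labelled training data: (email text, label) pairs.
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("limited offer win cash", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the attached report", "ham"),
    ("lunch with the team on friday", "ham"),
]

def train(data):
    """Count words per label and emails per label from the labelled dataset."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in data:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab

def classify(text, word_counts, label_counts, vocab):
    """Return the label with the highest Naive Bayes log-probability."""
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # Log prior: how common this label was in training.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Laplace (add-one) smoothing so unseen words do not zero out the score.
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

word_counts, label_counts, vocab = train(TRAINING_DATA)
print(classify("win free cash", word_counts, label_counts, vocab))           # spam
print(classify("team meeting on friday", word_counts, label_counts, vocab))  # ham
```

The "learning" here is just counting which words appear with which label; new emails are then scored against those learned patterns, which is the core idea of supervised learning described above.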

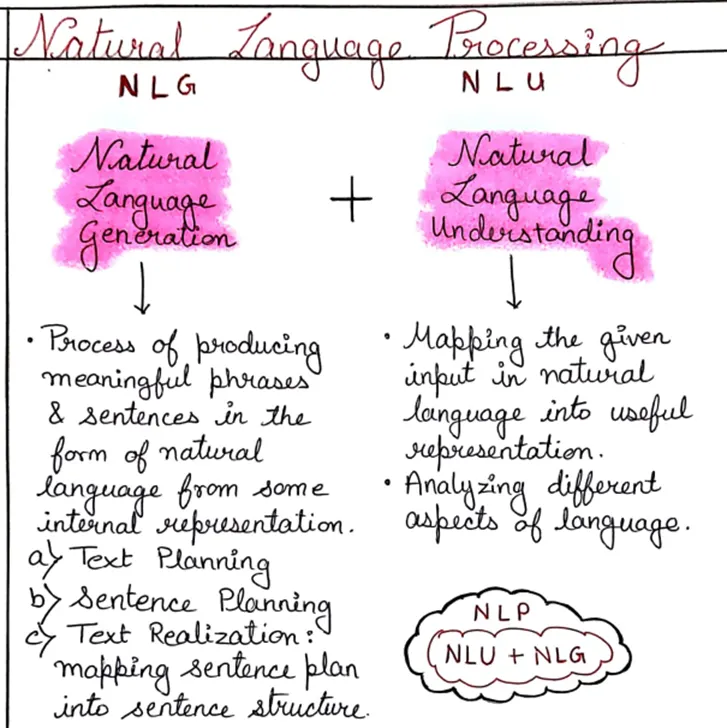

Components of NLP:

Natural Language Processing (NLP) is a subset of Artificial Intelligence that enables communication between a human and a machine using natural language rather than a coded or byte language. It has two main components:

a. Natural Language Understanding (NLU) is the component that processes and interprets the input a user provides in natural language, such as text or speech.

b. Natural Language Generation (NLG) is the component that generates output in natural language based on the input provided by the user.
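To make the split between NLU and NLG concrete, here is a toy assistant sketch. It assumes a hypothetical rule-based design: `understand` (the NLU step) turns a sentence into a structured intent with slots using regular expressions, and `generate` (the NLG step) turns that structure back into a sentence using templates. The intents, patterns, and templates are all made up for illustration; this is not how Siri or Google Assistant work internally, since real assistants use trained models.

```python
import re

# Hypothetical intent patterns used by the NLU step.
INTENT_PATTERNS = {
    "get_weather": re.compile(r"weather in (?P<city>[A-Za-z ]+)", re.IGNORECASE),
    "set_alarm": re.compile(r"alarm for (?P<time>\d{1,2}(:\d{2})? ?(am|pm)?)", re.IGNORECASE),
}

# Hypothetical response templates used by the NLG step.
TEMPLATES = {
    "get_weather": "Here is the weather forecast for {city}.",
    "set_alarm": "Okay, your alarm is set for {time}.",
    "unknown": "Sorry, I didn't understand that.",
}

def understand(utterance: str) -> dict:
    """NLU: map free text to a structured intent plus named slots."""
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.search(utterance)
        if match:
            slots = {name: value.strip() for name, value in match.groupdict().items() if value}
            return {"intent": intent, **slots}
    return {"intent": "unknown"}

def generate(meaning: dict) -> str:
    """NLG: turn the structured meaning back into a natural-language reply."""
    return TEMPLATES[meaning["intent"]].format(**meaning)

meaning = understand("Hey Siri, what's the weather in Paris?")
print(meaning)            # {'intent': 'get_weather', 'city': 'Paris'}
print(generate(meaning))  # Here is the weather forecast for Paris.
```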

As we saw above, NLP is the branch of AI that gives machines the ability to understand and process human language.

Advantages of NLP are:

Analysis of both structured and unstructured sources.

Fast and time-efficient processing.

Direct, end-to-end answers to questions.

Automation of repetitive tasks.

Insightful analysis of data such as customer feedback, social media posts, and user reviews.

Disadvantages are:

Training an NLP model requires a lot of diverse data and computation.

Integrating NLP into existing systems can be complex and can lead to compatibility issues, so it may require significant resources.

NLP models can inherit biases from their training data and even amplify them, leading to unfair outcomes.

Handling multiple languages in a single NLP model can be challenging.

Difficulty handling rare words.

The following concepts and techniques fall under the broader umbrella of NLP, the field that focuses on the interaction between computers and human language:

Tokenization is the process of breaking a text into individual units, typically words or phrases, known as tokens. These tokens are the building blocks for subsequent analysis. For example, splitting “Hey there! I am Aastha.” on spaces gives the tokens “Hey” “there!” “I” “am” “Aastha.”, where each word acts as a token.
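Tokenization can be sketched in a couple of lines of Python. The two functions below are illustrative assumptions, not a specific library's tokenizer: `whitespace_tokenize` splits only on spaces and so reproduces the example above, while `regex_tokenize` also separates punctuation into its own tokens, which is closer to what most NLP toolkits do.

```python
import re

def whitespace_tokenize(text: str) -> list[str]:
    # Split only on whitespace, so punctuation stays attached to words.
    return text.split()

def regex_tokenize(text: str) -> list[str]:
    # Runs of word characters (\w+) and each individual punctuation mark become tokens.
    return re.findall(r"\w+|[^\w\s]", text)

sentence = "Hey there! I am Aastha."
print(whitespace_tokenize(sentence))  # ['Hey', 'there!', 'I', 'am', 'Aastha.']
print(regex_tokenize(sentence))       # ['Hey', 'there', '!', 'I', 'am', 'Aastha', '.']
```

Which variant to choose depends on the next step: separating punctuation keeps “there” and “there!” from being counted as two different words.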

Stemming is the process of reducing words to their base or root form by removing suffixes. It aims to group words with the same root, even if the resulting stem is not a valid word. For example, affectation, affects, affected, and affection all originate from the single root word “affect”.
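A stemmer can be sketched as simple suffix stripping. The version below uses a small, hypothetical suffix list checked longest-first; real stemmers such as the Porter stemmer use a much richer set of rules, so treat this only as an illustration of the idea. Note how “studies” becomes “studie”, showing that a stem does not have to be a valid word.

```python
# Hypothetical suffix list, ordered longest-first so "ation" wins over "ion".
SUFFIXES = ["ation", "ing", "ion", "ed", "s"]

def simple_stem(word: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least 3 letters."""
    word = word.lower()
    for suffix in SUFFIXES:
        # The length check avoids over-stripping short words like "is" or "red".
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

print([simple_stem(w) for w in ["affectation", "affects", "affected", "affection"]])
# ['affect', 'affect', 'affect', 'affect']
print(simple_stem("studies"))  # 'studie' (not a valid word, but still a usable stem)
```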

Lemmatization is also the process of reducing words to their base form, called a lemma. Unlike stemming, it performs a morphological analysis of the word, so the resulting lemma is always a valid word. For example, gone, going, and went should all map to “go”.
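Because lemmatization needs knowledge of the language rather than just suffix rules, a minimal sketch is a dictionary lookup. The tiny lexicon below is hypothetical; real lemmatizers (for example, WordNet-based ones) combine a large dictionary with morphological rules and part-of-speech information. Notice that no suffix-stripping rule could map “went” to “go”, which is exactly where lemmatization differs from stemming.

```python
# Hypothetical mini-lexicon mapping inflected forms to their lemmas.
LEMMA_LEXICON = {
    "went": "go", "gone": "go", "going": "go", "goes": "go",
    "was": "be", "were": "be",
    "mice": "mouse",
}

def lemmatize(word: str) -> str:
    # Fall back to the lowercased word itself when it is not in the lexicon.
    return LEMMA_LEXICON.get(word.lower(), word.lower())

print([lemmatize(w) for w in ["gone", "going", "went"]])  # ['go', 'go', 'go']
print(lemmatize("Mice"))  # 'mouse'
```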

Part of Speech (POS) tagging assigns a grammatical category (noun, verb, adjective, etc.) to each word in a sentence, which helps in understanding its syntactic structure. For example, in “And now NOT completely different”, “And” is a coordinating conjunction (CC), “now”, “NOT”, and “completely” are adverbs (RB), and “different” is an adjective (JJ).
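The example above can be reproduced with a lookup-table tagger using the same Penn Treebank tags (CC, RB, JJ). This is a deliberately simplified sketch: the `TAG_LEXICON` table is hypothetical, and real taggers learn tags from annotated corpora and use the surrounding words to resolve ambiguity. Unknown words default to “NN” (noun), a common baseline.

```python
# Hypothetical word-to-tag table using Penn Treebank tags.
TAG_LEXICON = {
    "and": "CC",         # coordinating conjunction
    "now": "RB",         # adverb
    "not": "RB",         # adverb
    "completely": "RB",  # adverb
    "different": "JJ",   # adjective
}

def pos_tag(sentence: str) -> list[tuple[str, str]]:
    # Look up each whitespace-separated word; unknown words default to "NN" (noun).
    return [(word, TAG_LEXICON.get(word.lower(), "NN")) for word in sentence.split()]

print(pos_tag("And now NOT completely different"))
# [('And', 'CC'), ('now', 'RB'), ('NOT', 'RB'), ('completely', 'RB'), ('different', 'JJ')]
```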

Named Entity Recognition (NER) identifies and classifies entities (such as names of people, locations, and organizations) in a text. For example, in “Vidhit is working at Google”, Vidhit is a “person” and Google is an “organization”.
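The simplest form of NER is a gazetteer: a dictionary of known entity names and their types. The sketch below assumes a small, hypothetical `GAZETTEER`; real NER systems use trained sequence models so they can recognize names they have never seen before, which a fixed list cannot do.

```python
import re

# Hypothetical gazetteer mapping known names to entity types.
GAZETTEER = {
    "Vidhit": "PERSON",
    "Aastha": "PERSON",
    "Google": "ORGANIZATION",
    "Netflix": "ORGANIZATION",
}

def find_entities(text: str) -> list[tuple[str, str]]:
    """Return (token, entity type) pairs for tokens found in the gazetteer."""
    entities = []
    for token in re.findall(r"\w+", text):
        label = GAZETTEER.get(token)
        if label:
            entities.append((token, label))
    return entities

print(find_entities("Vidhit is working at Google."))
# [('Vidhit', 'PERSON'), ('Google', 'ORGANIZATION')]
```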

Chunking groups words into chunks based on their parts of speech. It helps in identifying phrases or meaningful units in a sentence. For example, in “Hey there! I am Aastha.”, the words taken together form one big chunk instead of separate tokens.
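Chunking builds directly on POS tags. The sketch below groups noun phrases using the pattern “optional determiner (DT), any number of adjectives (JJ), one or more nouns (NN*)”. The hand-tagged example sentence and the function name `np_chunk` are illustrative assumptions; libraries usually let you express such patterns as a chunk grammar instead of a hand-written loop.

```python
def np_chunk(tagged: list[tuple[str, str]]) -> list[str]:
    """Group DT? JJ* NN+ sequences of (word, tag) pairs into noun-phrase chunks."""
    chunks = []
    i, n = 0, len(tagged)
    while i < n:
        j = i
        if tagged[j][1] == "DT":  # optional determiner
            j += 1
        while j < n and tagged[j][1] == "JJ":  # any number of adjectives
            j += 1
        k = j
        while k < n and tagged[k][1].startswith("NN"):  # one or more nouns
            k += 1
        if k > j:  # at least one noun, so tagged[i:k] is a noun phrase
            chunks.append(" ".join(word for word, _ in tagged[i:k]))
            i = k
        else:
            i += 1  # no chunk starts here; move to the next word
    return chunks

# Hypothetical hand-tagged sentence: "the little yellow dog barked at the cat"
tagged_sentence = [
    ("the", "DT"), ("little", "JJ"), ("yellow", "JJ"), ("dog", "NN"),
    ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN"),
]
print(np_chunk(tagged_sentence))  # ['the little yellow dog', 'the cat']
```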
